SDelete – How to securely wipe SSD drives in Windows 7 – The Visual Guide

SSD drives actively avoid overwriting data because of wear leveling and device over-provisioning, which provide maximum functionality and speed. They are a privacy violation waiting to happen. So how do we counter SSD technology?

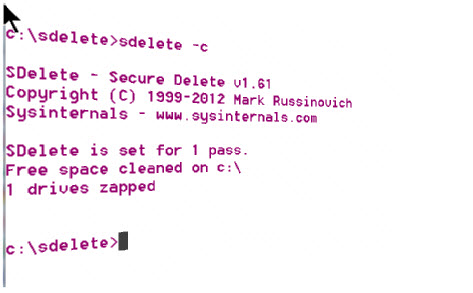

1. Windows 7 – Use SDelete

Download SDelete from Technet.

http://technet.microsoft.com/en-gb/sysinternals/bb897443.aspx

Make a c:\sdelete folder

Run a Command prompt and change directories to

cd c:\sdelete

sdelete -c {this will wipe free space}

sdelete -c c: {wipes free space on c drive}

sdelete -c d: {wipes free space on d drive}

Sdelete can run multiple passes and delete a specific directory

sdelete -p 2 -s c:\Users\smile\Downloads

-p 2 = run 2 passes

-s = delete this directory and its subdirectories for c:\Users\smile\Downloads
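
If you would rather script these calls than type them, here is a minimal Python sketch that only builds the argument lists for the commands shown above. The helper name `sdelete_args` is made up for illustration, it uses only the `-c`, `-p` and `-s` flags from this post, and it assumes `sdelete` is on your PATH or in the current folder.

```python
def sdelete_args(target, passes=None, recurse=False, free_space=False):
    """Build an SDelete argument list (hypothetical helper, flags from the post)."""
    args = ["sdelete"]
    if passes is not None:
        if passes < 1:
            raise ValueError("passes must be at least 1")
        args += ["-p", str(passes)]   # -p N = run N passes
    if recurse:
        args.append("-s")             # -s = include subdirectories
    if free_space:
        args.append("-c")             # -c = wipe free space
    args.append(target)
    return args


print(sdelete_args("c:", free_space=True))
print(sdelete_args(r"c:\Users\smile\Downloads", passes=2, recurse=True))
# To actually run one of these you could pass the list to
# subprocess.run(args, check=True) from the c:\sdelete folder.
```

Building a list instead of one long string avoids quoting problems with paths that contain spaces.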

******

2. Linux – Use DD

Of the drive wiping software tested, DD was the most effective on SSD drives.

And the results… were MEGA – DD had zero files found and zero loadable files. AWESOME!!
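
The post does not give the exact DD command used, so none is reproduced here. As a rough illustration of the idea behind it (overwrite everything with zeros), here is a small Python sketch that zero-fills an ordinary temporary file. The function names are made up, and this works on a file, not on a raw drive. As the hiccups further down explain, an overwrite at this level does not guarantee that the SSD controller has erased the old flash cells.

```python
import os
import tempfile


def zero_fill(path, chunk_size=1024 * 1024):
    """Overwrite every byte of an existing file with zeros, in place."""
    remaining = os.path.getsize(path)
    zeros = bytes(chunk_size)
    with open(path, "r+b") as f:
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(zeros[:n])
            remaining -= n
        f.flush()
        os.fsync(f.fileno())  # ask the OS to push the writes to the device


def demo():
    """Create a throwaway file, zero it, and report (size, zero bytes)."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"secret data" * 1000)
        path = tmp.name
    try:
        zero_fill(path, chunk_size=4096)
        with open(path, "rb") as f:
            data = f.read()
        return len(data), data.count(0)
    finally:
        os.remove(path)


print(demo())
```

If both numbers match, every byte the file system can see has been replaced with a zero.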

3. EFF recommends Eraser – don’t use this

Both Eraser and Wipe proved the least effective drive wiping software on SSD drives – leaving thousands of files on the drive when tested (3,866 files on test one, totaling 13,925 MB).

Ummh, so DD and SDelete it is then.

****

Hiccups when cleaning SSD Drives – why are they a privacy concern?

So what’s going on with SSD drives… why is so much data being found on them?

Hiccup 1

Wear leveling avoids data deletion at all costs… do you realise what this means for privacy? To reduce failure rates, it makes sure data is saved evenly across the drive, but in doing so it avoids overwrites or deletions. Yep, your data is kept forever… if the SSD has its way. Not so keen on SSD technology now, are we!

Hiccup 2 – The SSD holds 2.2 GB more than the 29.8 GB a HDD would show

Your OS will assume it has 29.8 GB, whereas the drive has a lurking 2.2 GB of excess (over-provisioned) space in which files could be found. Oh this just gets better and better!!
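
Where could those two numbers come from? The post only gives 29.8 and 2.2, so the following is a sketch under an assumption: that the test drive carries 32 GiB of raw flash and is sold as a "32 GB" drive (32 billion bytes). With that assumption the arithmetic lands on the same figures.

```python
GIB = 2**30  # bytes in one binary gigabyte


def spare_area(raw_gib, advertised_gb):
    """Return (space visible to the OS, hidden spare space), both in GiB.

    Assumes raw flash is counted in binary units and the advertised
    size in decimal units; both inputs are illustrative guesses.
    """
    raw_bytes = raw_gib * GIB
    user_bytes = advertised_gb * 10**9
    user_gib = user_bytes / GIB
    spare_gib = (raw_bytes - user_bytes) / GIB
    return user_gib, spare_gib


user, spare = spare_area(32, 32)
print(f"visible to OS: {user:.1f} GiB, hidden spare: {spare:.1f} GiB")
```

Whatever the real layout of the test drive, the point stands: the spare area is outside the address range the OS can write to, so a free-space wipe cannot target it directly.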

Hiccup 3 – Writes in 4 KB pages but deletes in 512 KB Blocks.

Yeah, I know, tell me about it!!

So it writes in 4KB pages… but has to delete in 512 KB Blocks… and it avoids deletion due to wear leveling technology. My oh my, not good is it?
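
To see why this matters, here is a toy Python model using the 4 KB page and 512 KB block figures above. It is only a sketch: the class and its methods are invented for illustration, real controllers are far more complicated, and the model has no garbage collection or TRIM. It shows one thing, which is that an "overwrite" from the OS side can land on a fresh page while the old page still holds the old data.

```python
PAGE_KB = 4
BLOCK_KB = 512
PAGES_PER_BLOCK = BLOCK_KB // PAGE_KB  # pages that must be erased together


class ToyFlash:
    """Toy model: each logical write goes to a fresh physical page."""

    def __init__(self, blocks=2):
        self.pages = [None] * (blocks * PAGES_PER_BLOCK)  # physical pages
        self.mapping = {}   # logical page number -> physical page index
        self.next_free = 0

    def write(self, logical_page, data):
        if self.next_free >= len(self.pages):
            raise RuntimeError("toy model has no garbage collection")
        self.pages[self.next_free] = data
        self.mapping[logical_page] = self.next_free  # old page is now stale
        self.next_free += 1

    def read(self, logical_page):
        return self.pages[self.mapping[logical_page]]

    def physical_copies(self, data):
        return sum(1 for p in self.pages if p == data)


flash = ToyFlash()
flash.write(0, "secret")
flash.write(0, "zeros")  # the "overwrite" as the OS sees it
print(PAGES_PER_BLOCK, flash.read(0), flash.physical_copies("secret"))
```

In this model the OS reads back the new data, yet one physical copy of the old data is still sitting in flash until the whole block gets erased.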

Known flaw of SDelete: it misses file names located in free space. SDelete is not perfect, but it is pretty good.

References

Secure State Deletion

http://ro.ecu.edu.au/cgi/viewcontent.cgi?article=1064&context=adf

This is almost all wrong…

Modern SSDs use TRIM to be informed of which blocks of data are no longer considered in use, so they can be wiped.

http://en.wikipedia.org/wiki/Trim_%28computing%29

This is an important function on SSDs nowadays because block erasing is an expensive operation, so it’s better to do it before any write is requested; write operations can then be done directly without wiping blocks first. Performance therefore stays uniformly high however many times you write or delete.

You may be aging your SSD by “over-trimming”, or even worse, depending on how SDelete operates…

There is much more wrong information in the post… erase block size differs from SSD to SSD. For example, the Samsung EVO has an unusual 1536 KB erase block size (EBS) and an 8 KB page size (write). These differ from the file system block size, which can make SDelete age the drive even more…

SDelete is outdated (it is from 2013), so it probably ignores SSD peculiarities… Modern SSDs don’t need much more than a few settings to avoid aggressive writing/caching on them; for the rest they are completely managed by the controller.

As the research papers found, you are totally dependent on the manufacturer’s coding of the erasure. How many manufacturers’ erasure codes work when forensically tested?

The answer, surprisingly, is that only 3 out of 12 drives were erased.

The conclusion of the academic paper was that we MUST NOT trust manufacturers’ erasure or coding. Some drives will report a totally successful erasure, yet when analysed the drive had erased NOTHING. Nada. Nil.

As a user, it’s best not to trust any commands coded by a drive manufacturer.

What the paper exposes is that before modern SSDs with the TRIM ATA command (the paper is from 2009 and tells readers that it wasn’t possible to test any TRIM SSD), data wiping was a really obscure process, and that is really true.

What is known is that some procedures here can really blow your SSD’s lifespan, and that TRIM is a non-queued (instantaneous) ATA ISO command that, if implemented to its purpose by manufacturers (performance and write amplification), will issue block erasure before any other request to read/write…

TRIM is a lot more consistent, and if secure deletion is so important it is better to investigate your drive’s nature than to go directly to bad practices without necessity.

A better investigation of the problem is:

http://articles.forensicfocus.com/2014/09/23/recovering-evidence-from-ssd-drives-in-2014-understanding-trim-garbage-collection-and-exclusions/

The paper stated that we must distrust manufacturers’ secure erasure, as in two-thirds of cases it just doesn’t work.

A manufacturer can claim it works… but that doesn’t mean that it does.

The other issue is that SSDs generate so many copies of a file… up to 16 copies were found… the erasure of one copy may still leave 15 copies on a disk.

The take-away message is that you do not trust manufacturers’ claims. It would be interesting to see the EU testing these erasure codes under privacy laws, or making the erasure part of a legal EU Data Protection law, and fining SSD manufacturers if the erasure commands failed when tested.

Yes, I understood the paper, but as I said, this paper is at best *very old* and not very relevant nowadays. In light of other good and trusted test sources, a lot of the paper’s claims are now history, since practically all manufacturers use TRIM, and TRIM has shown very clear behavior with today’s technology in laboratory tests (in practical terms the same kind of recovery tests made in the paper).

A good number of SSDs nowadays return either zeros or random values for a trimmed block on any access. Speed gains confirm TRIM’s effectiveness too: for sustained good performance when writing to deleted space, that space needs to have had its blocks reset. The tests point out that maybe only manufacturers would be able to restore anything in those cases, a clear *maybe*. That points to more possibilities, such as whether any of the procedures here can be trusted, as a lot of tests show it all depends on what the controller truly does.

I understand too that it is really bad form to point people to hazardous procedures without warning of possible damage, regardless of intention… And worse if the post can’t be sure of its own results since, as I pointed out, manufacturers can directly access the NAND and obtain data that the controller itself hides. And this is my first point, to do what the page did not do: point out that this may be very hazardous to the hardware and is probably very unnecessary, that you may have hardware whose TRIM behaves as intended here in 2015, and that it isn’t certain you will obtain the same safety as with an HD, since SSDs have controllers doing a massive abstraction of the real data management.

In the end, it is important to point out that if you’re really so concerned, be smart and buy an HD. No product on the market today can offer more transparent data management than an HD.
